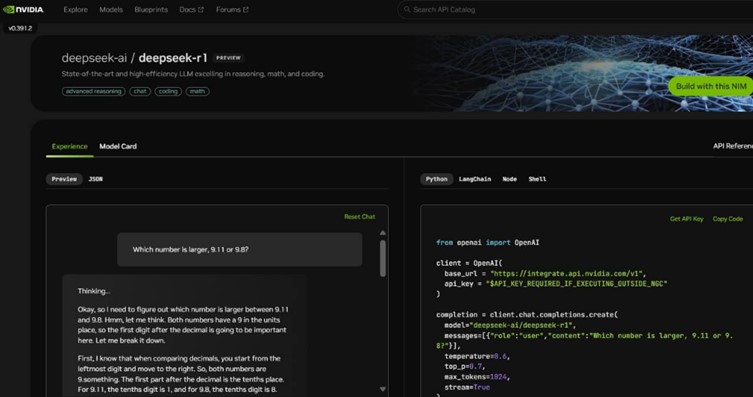

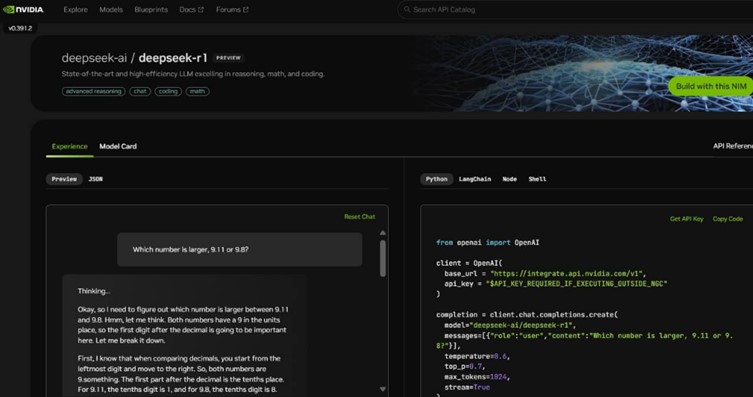

▲ DeepSeek R1 support in NVIDIA NIM / (Image: NVIDIA)

R1 available as an NIM microservice preview

NVIDIA announced on the 3rd that it has begun supporting DeepSeek R1 in NVIDIA NIM.

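DeepSeek R1 is an open model with state-of-the-art reasoning capabilities. Rather than giving a direct answer, a reasoning model like DeepSeek R1 performs multiple inference passes over a query, working through chain-of-thought, consensus, and search methods to generate the best answer.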
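The "consensus" step can be illustrated with a minimal sketch of majority voting (often called self-consistency): run several independent reasoning passes and keep the final answer that appears most often. This is a generic illustration, not DeepSeek's or NVIDIA's implementation; `sample` stands in for a hypothetical function that runs one reasoning pass and returns only its final answer.

```python
import itertools
from collections import Counter
from typing import Callable, List


def consensus_answer(sample: Callable[[str], str], query: str, n_passes: int = 5) -> str:
    """Run several independent passes and return the most frequent final answer.

    `sample` is a hypothetical callable wrapping one reasoning pass of a model.
    """
    if n_passes < 1:
        raise ValueError("n_passes must be at least 1")
    answers: List[str] = [sample(query) for _ in range(n_passes)]
    # most_common(1) returns [(answer, count)]; ties go to the answer seen first
    return Counter(answers).most_common(1)[0][0]


# Usage with a fake sampler that replays canned answers instead of calling a model
canned = itertools.cycle(["42", "41", "42", "40", "42"])
print(consensus_answer(lambda q: next(canned), "What is 6 x 7?"))  # → 42
```

More passes cost more compute at inference time, which is exactly the trade-off the article describes next.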

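Spending more computation at inference time to reach a better answer in this way is known as test-time scaling, and R1 is a perfect example of this scaling law. It shows why accelerated computing is critical to the demands of agentic AI inference: as a model is allowed to iteratively "think" through a problem, it produces more output tokens and longer generation cycles, so model quality continues to scale.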

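Delivering both real-time inference and high-quality responses from reasoning models like this requires substantial test-time compute. To help developers securely experiment with these capabilities and build their own specialized agents, the 671-billion-parameter DeepSeek R1 model is now available as an NVIDIA NIM microservice preview.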
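To give a sense of what 671 billion parameters means for hardware, here is a back-of-the-envelope sketch of the memory needed for the weights alone. The precisions (1 byte per parameter for FP8, 2 bytes for BF16) are illustrative assumptions; the article does not say which precision the NIM uses, and the estimate ignores the KV cache and runtime overhead.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


R1_PARAMS = 671e9  # 671 billion parameters, as stated in the article

# Assumed precisions for illustration only
for label, nbytes in [("FP8", 1), ("BF16", 2)]:
    print(f"{label}: ~{weight_memory_gb(R1_PARAMS, nbytes):,.0f} GB")
# FP8: ~671 GB
# BF16: ~1,342 GB
```

For scale, an HGX H200 system has eight H200 GPUs with 141 GB of memory each (about 1,128 GB in total), so FP8 weights of this size fit on a single system with memory left over for serving.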

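The DeepSeek R1 NIM microservice can deliver up to 3,872 tokens per second on a single NVIDIA HGX H200 system. Developers can now test and experiment with the application programming interface (API), which is expected to be offered as an NIM microservice, part of the NVIDIA AI Enterprise software platform.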
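As a rough sketch of what trying the API might look like, the snippet below builds a chat-completion request in the OpenAI-compatible format that NIM LLM microservices use, without sending it. The base URL, the model id `deepseek-ai/deepseek-r1`, and the helper `build_chat_request` are assumptions for illustration; check the NVIDIA API catalog for the actual endpoint and model name.

```python
import json
import urllib.request

# Assumptions (not stated in the article): hosted preview endpoint and model id
DEFAULT_BASE_URL = "https://integrate.api.nvidia.com/v1"
DEFAULT_MODEL = "deepseek-ai/deepseek-r1"


def build_chat_request(api_key: str, prompt: str,
                       base_url: str = DEFAULT_BASE_URL,
                       model: str = DEFAULT_MODEL,
                       max_tokens: int = 4096) -> urllib.request.Request:
    """Build (but do not send) a POST request for one chat completion.

    `build_chat_request` is a hypothetical helper, not part of any NVIDIA SDK.
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # Reasoning models emit long chains of thought, so allow a generous token budget
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


req = build_chat_request("YOUR_API_KEY", "Which is larger, 9.11 or 9.9?")
# Sending requires a valid key and network access:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

Keeping request construction separate from sending makes it easy to point the same code at a self-hosted NIM by changing `base_url`.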

In addition, enterprises can use NVIDIA AI Foundry with NVIDIA NeMo software to create customized DeepSeek-R1 NIM microservices for specialized AI agents.