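As interest in ChatGPT grows in Korea and abroad, the semiconductor industry expects ChatGPT to drive demand for chips such as △GPUs △servers △memory, putting a spotlight on the market that generative AI and large-scale AI models are set to lead.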

NVIDIA GPUs benefit from ChatGPT… AMD also rolls out a succession of server chips

“GPT-4's trillions of parameters make high-capacity, high-speed, low-power memory a must”


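Interest in ChatGPT is climbing steeply both in Korea and abroad. ChatGPT reportedly reached 100 million users worldwide within two months. In Korea, Naver search trend data show that searches for ‘챗 GPT’ and ‘Chatgpt’ rose 8,428% and 1,424%, respectively, compared with December. Globally, Google search interest in ChatGPT hit 100, the maximum of Google Trends' relative index, in February, up from 0 to 2 in November.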

■ Commercializing ChatGPT estimated to take 30,000 GPUs

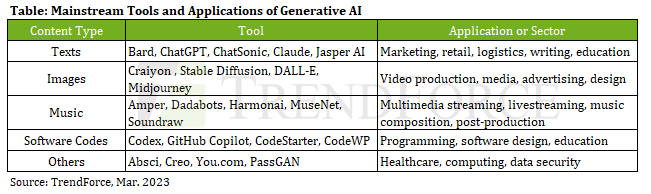

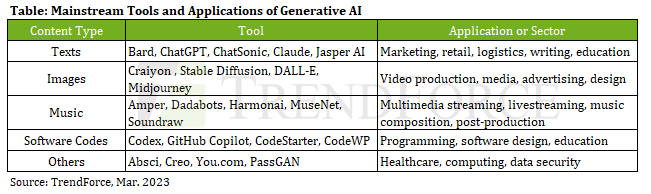

▲Major generative AI tools and application areas (Source: TrendForce)

According to market research firm TrendForce, ChatGPT's GPU demand as it prepares for commercialization is estimated to reach 30,000 units, measured in NVIDIA A100 GPUs.

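TrendForce noted that in 2020 the number of training parameters in the model behind ChatGPT grew to 180 billion, requiring about 20,000 GPUs to process the training data, and projected that commercialization would push the requirement up to 30,000 GPUs.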

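As generative AI establishes itself as a trend, GPU demand is therefore expected to rise sharply, lifting profits across the related supply chain. NVIDIA in particular is drawing the most market attention because it is expected to benefit most from the AI development spurred by ChatGPT's anticipated success.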

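NVIDIA's DGX A100, a system for AI workloads built on A100 Tensor Core 80GB GPUs, delivers 5 petaFLOPS (5 quadrillion operations per second), offering an option for big-data analytics and AI acceleration. AMD, for its part, has been targeting the server market with a succession of Instinct accelerators, the MI100 through MI300 series. In her recent CES keynote, CEO Lisa Su mentioned ChatGPT and said, “It could cut training time from months to weeks, saving millions of dollars in energy.”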
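To put TrendForce's 30,000-GPU estimate next to the DGX A100 figure, here is a rough back-of-the-envelope sketch. Beyond what the article states, it assumes that each DGX A100 system houses 8 A100 GPUs, and it treats the 5-petaFLOPS figure as a vendor peak rating; sustained throughput on real workloads would be lower. All names are hypothetical and illustrative only.

```python
# Back-of-the-envelope sketch; constant and function names are hypothetical.
GPUS_REQUIRED = 30_000        # TrendForce estimate, counted in A100 GPUs
GPUS_PER_DGX_A100 = 8         # assumption: 8 A100 GPUs per DGX A100 system
DGX_A100_PEAK_PFLOPS = 5      # quoted peak per system: 5 PFLOPS = 5 * 10**15 ops/s


def systems_needed(gpus: int, gpus_per_system: int) -> int:
    """Smallest number of systems that can host all GPUs (ceiling division)."""
    return -(-gpus // gpus_per_system)


def aggregate_peak_ops_per_s(systems: int, pflops_per_system: int) -> int:
    """Combined peak rate in operations per second (1 PFLOPS = 10**15 ops/s)."""
    return systems * pflops_per_system * 10**15


systems = systems_needed(GPUS_REQUIRED, GPUS_PER_DGX_A100)
peak = aggregate_peak_ops_per_s(systems, DGX_A100_PEAK_PFLOPS)
print(f"{systems:,} systems, {peak / 10**18:.2f} exaFLOPS peak")
```

Ceiling division keeps the system count conservative when the GPU total is not a multiple of eight; integer arithmetic avoids rounding noise in the aggregate.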

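TrendForce argued that TSMC will play a pivotal role in this supply chain as the main foundry for advanced computing chips. TSMC is currently the primary manufacturer of NVIDIA's chips and holds a firm position in leading-edge process nodes and chiplet packaging. New demand for ABF (Ajinomoto Build-up Film) substrates is also expected, along with gains for AI chip developers.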

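Lee Seung-woo, head of the research center at Eugene Investment & Securities, commented on ChatGPT at the Semiconductor Development Strategy Forum, forecasting that “demand for computation and for additional servers will grow depending on how far AI services expand,” but stressed that what matters most is growth in the number of users.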

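He noted that “Frontier, currently the world's fastest supercomputer, runs at 1.1 exaflops (about 1.102 quintillion operations per second), and training GPT-3 on it would take about 3.5 days.” He added that each transaction costs about 2 cents on average. If, for example, 30 million people asked an average of 10 questions a day, that would generate 300 million transactions, requiring $6 million (about 8 billion won) in computing costs per day.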
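Lee's arithmetic can be reproduced in a few lines. In the hypothetical sketch below, the user count, questions per user, 2-cent cost and 1.1-exaflops rate come from the article, while two inputs are outside assumptions used only as a sanity check: an illustrative exchange rate of 1,300 won per dollar, and a GPT-3 training budget of about 3.14 × 10^23 FLOPs (the total reported in the GPT-3 paper). The training-time estimate also assumes the machine sustains its full peak rate, which real training would not.

```python
# Hypothetical sketch; inputs marked "assumption" are not from the article.
DAILY_USERS = 30_000_000          # article: 30 million users per day
QUESTIONS_PER_USER = 10           # article: 10 questions per user per day
COST_PER_TRANSACTION_CENTS = 2    # article: about 2 cents per transaction
KRW_PER_USD = 1_300               # assumption: illustrative exchange rate

FRONTIER_FLOPS = 1.1e18           # article: 1.1 exaflops
GPT3_TRAINING_FLOPS = 3.14e23     # assumption: total compute from the GPT-3 paper
SECONDS_PER_DAY = 86_400


def daily_transactions(users: int, questions_per_user: int) -> int:
    return users * questions_per_user


def daily_cost_usd(transactions: int, cents_per_transaction: int) -> float:
    # Work in integer cents and convert to dollars only at the end.
    return transactions * cents_per_transaction / 100


def training_days(total_flops: float, flops_per_second: float) -> float:
    # Idealized: assumes 100% utilization of the machine's peak rate.
    return total_flops / flops_per_second / SECONDS_PER_DAY


tx = daily_transactions(DAILY_USERS, QUESTIONS_PER_USER)
usd = daily_cost_usd(tx, COST_PER_TRANSACTION_CENTS)
krw_billion = usd * KRW_PER_USD / 1e9
days = training_days(GPT3_TRAINING_FLOPS, FRONTIER_FLOPS)

print(f"{tx:,} transactions/day, ${usd:,.0f}/day (~{krw_billion:.1f} billion won)")
print(f"GPT-3 on Frontier at peak: ~{days:.1f} days")
```

Under these assumptions the daily bill comes to $6 million, or about 7.8 billion won, in line with the "about 8 billion won" quoted. The idealized training time lands near 3.3 days, close to the roughly 3.5 days cited; any utilization below peak would lengthen it.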

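“AI training itself is not a major variable in demand growth,” Lee said. “What matters is not training but the traffic that comes with a growing user base, and that can drive chip demand for server expansion and model building.”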

■ GPT-4 calls for memory hyperscaling

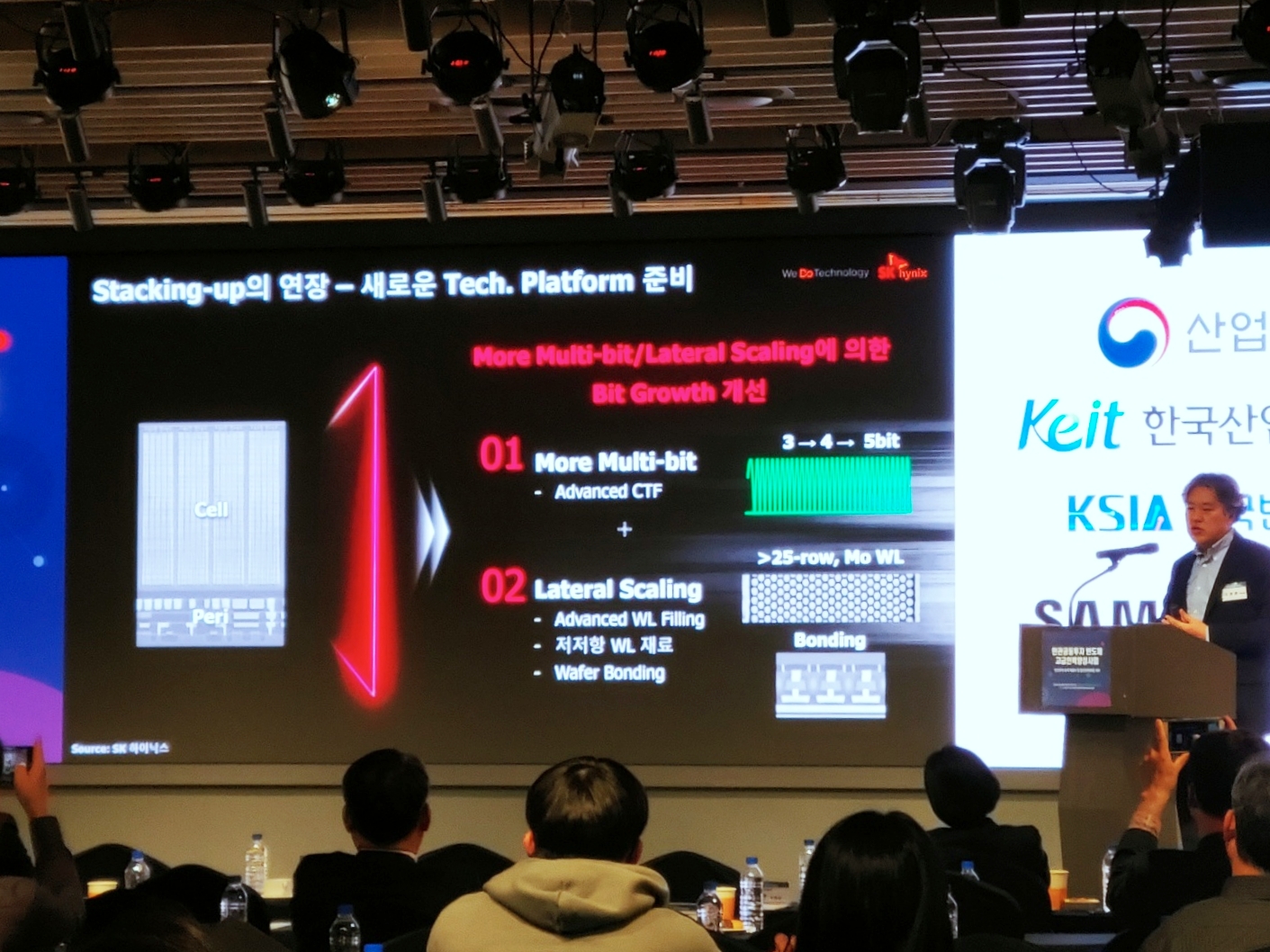

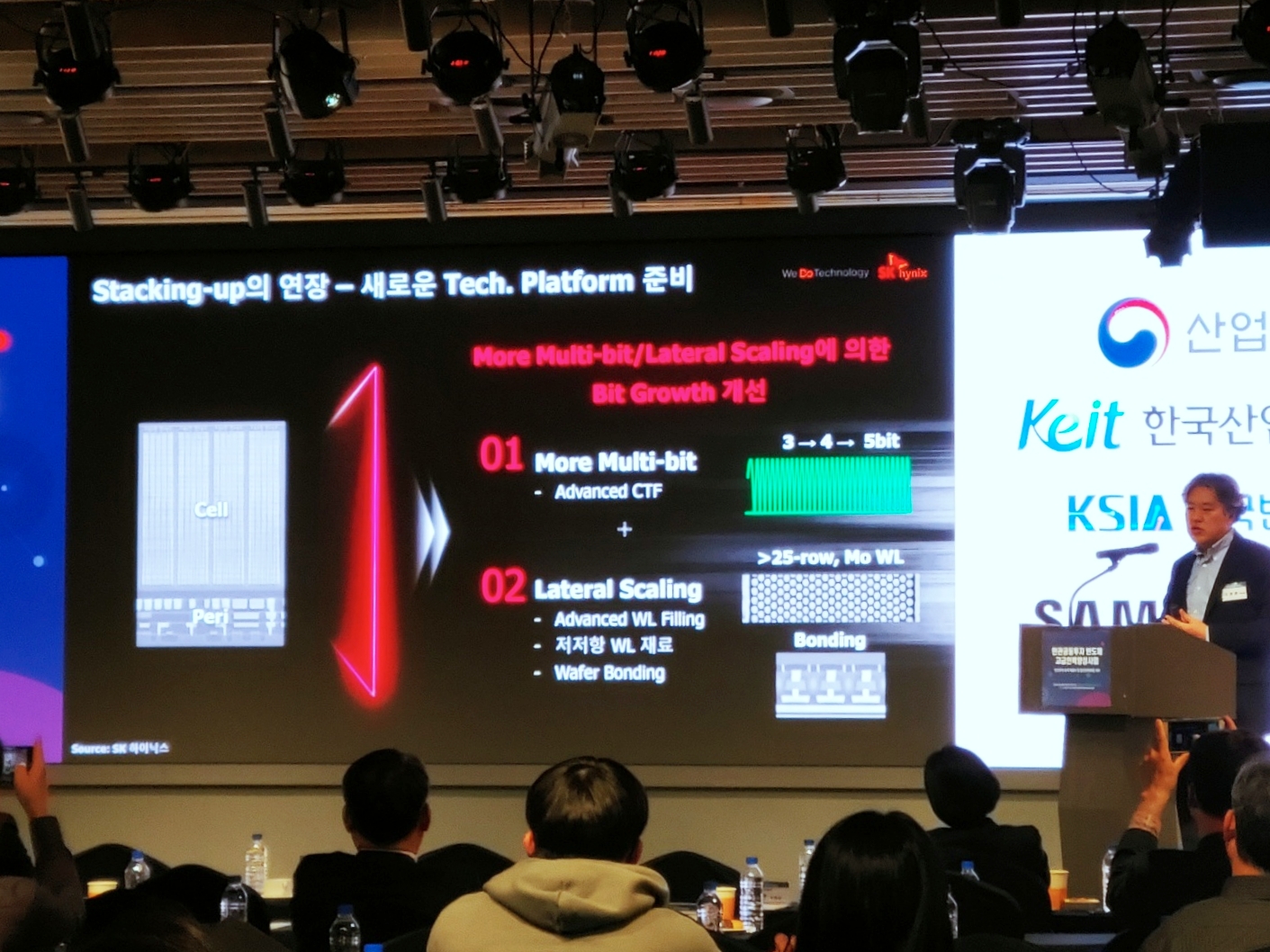

▲SK hynix Vice President Lee Seong-hoon presenting at the Semiconductor Development Strategy Forum

“Once GPT-4 is actually commercialized, I expect the growth in data-processing speed and capacity to be truly enormous.”

So said Lee Seong-hoon, vice president of SK hynix's Future Technology Research Institute, at the recent Semiconductor Development Strategy Forum.

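After GPT-3.5, the model behind ChatGPT, stunned the world with 175 billion parameters, the upcoming version 4.0 is reportedly expected to have between 1 trillion and 100 trillion parameters, further raising expectations among the industry and users.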
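The capacity side of this argument can be made concrete with a rough sizing sketch. Beyond what the article states, it assumes weights stored in 16-bit precision (2 bytes per parameter) and counts only the weights; training state, activations and serving caches would add substantially more. The 80 GB per-GPU figure is the A100 80GB capacity mentioned earlier, and the function names are hypothetical.

```python
import math

BYTES_PER_PARAM = 2      # assumption: FP16/BF16 weights
GPU_MEMORY_GB = 80       # A100 80GB


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Memory needed just to store the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_weights(num_params: float, gpu_memory_gb: float = GPU_MEMORY_GB) -> int:
    """Lower bound on GPUs needed to hold the weights, ignoring everything else."""
    return math.ceil(weight_memory_gb(num_params) / gpu_memory_gb)


# Parameter counts as reported in the article.
scenarios = {
    "GPT-3.5 (175B)": 175e9,
    "GPT-4, low end (1T)": 1e12,
    "GPT-4, high end (100T)": 100e12,
}

for name, params in scenarios.items():
    print(f"{name}: {weight_memory_gb(params):,.0f} GB of weights, "
          f">= {min_gpus_for_weights(params):,} x 80 GB GPUs")
```

Even under this optimistic weights-only count, the reported range moves from a few hundred gigabytes to hundreds of terabytes, which illustrates why capacity heads the list of memory requirements in this section.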

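The semiconductor industry at home and abroad is also watching ChatGPT closely. Ultra-fast wireless transmission of massive data over 5G and 6G infrastructure, autonomous and connected cars, and now the AI shock set off by ChatGPT all fundamentally require high-capacity, ultra-high-speed data handling, in other words the hyperscaling of memory.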

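In addition, the major search-engine providers that will develop and operate ChatGPT and similar services, such as △Microsoft △Google △Naver, will inevitably demand low-power solutions as well, both to meet ESG commitments and to cut energy costs.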

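“In the end, the value of memory lies in △hyperscaled storage △high speed △solutions that drastically cut power consumption,” Lee Seong-hoon said. Looking ahead, he added that for DRAM, “overcoming the limits of scaling requires △finer patterning △larger cell capacitors △lower contact resistance at interconnect junctions.”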

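With memory makers' losses expected to widen in the first half of this year as demand plunges, all eyes are on whether ChatGPT's success will drive demand for memory, servers and AI compute processors.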